The Mechanics of Memory Mapping and Thread Safety

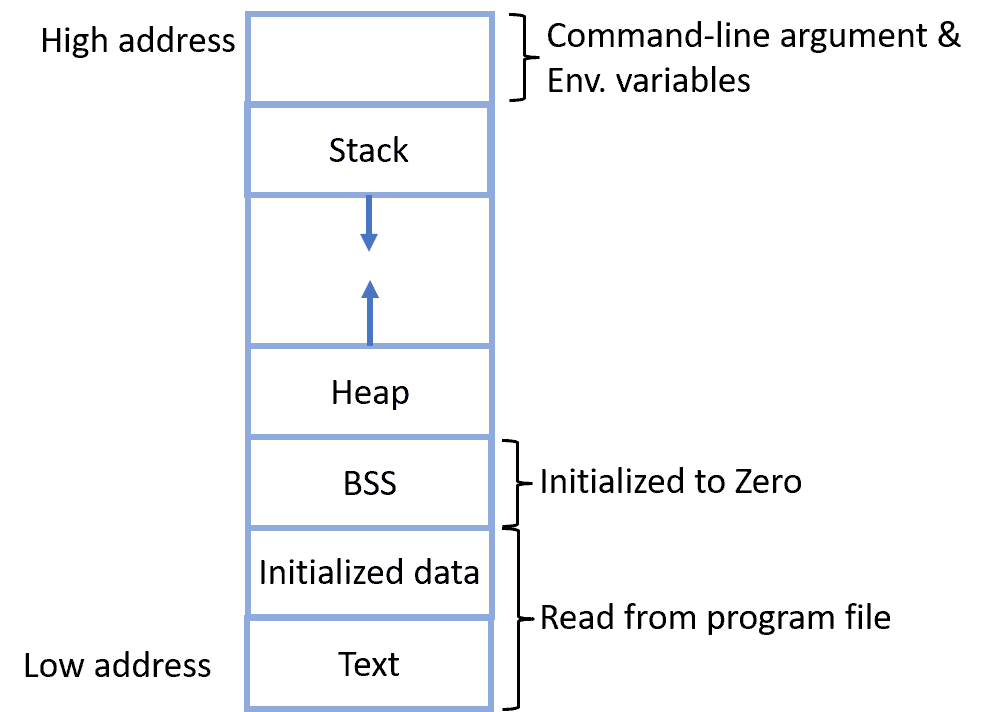

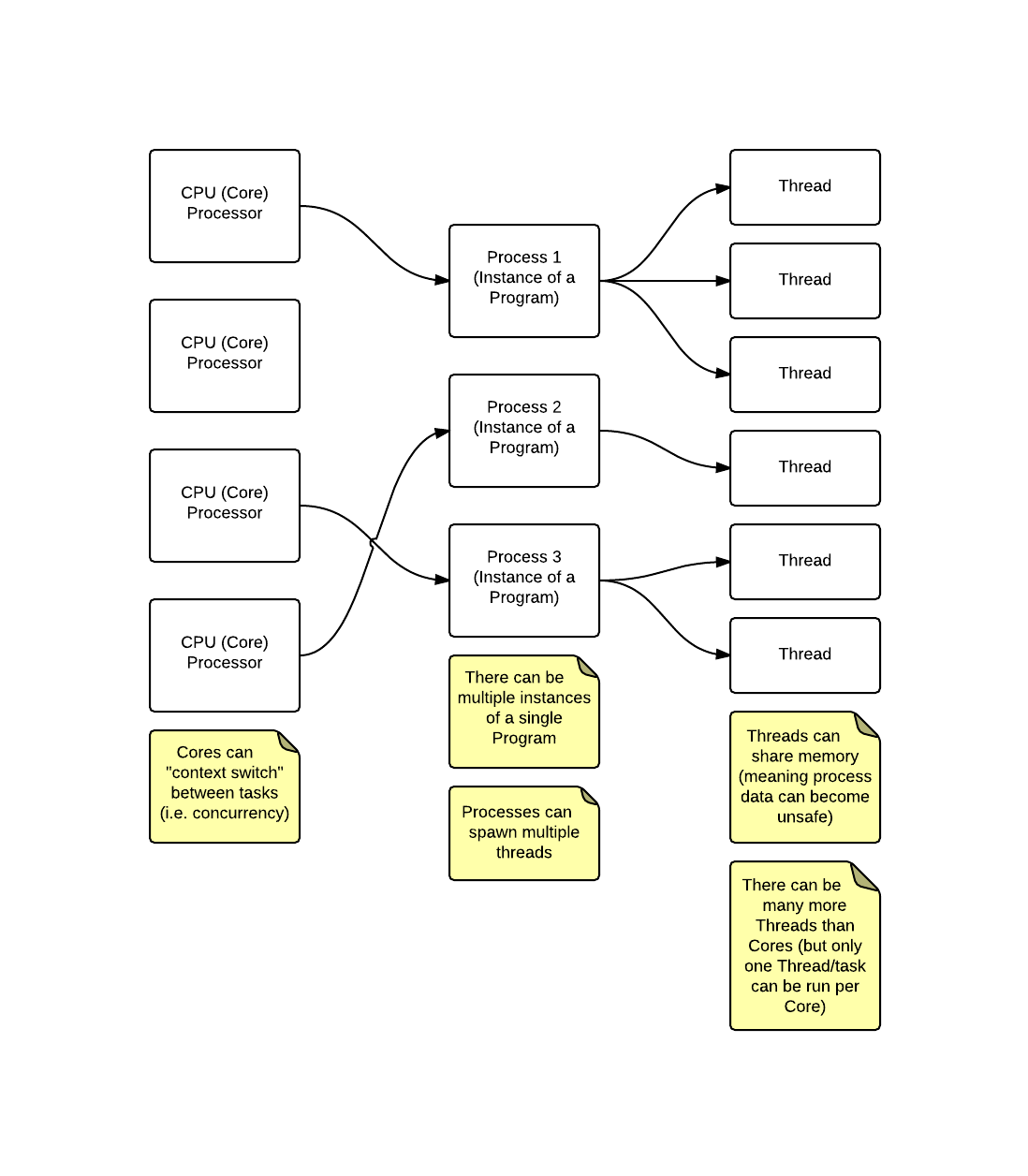

Memory mapping, exposed on POSIX systems through the mmap system call, maps a file or device into a process’s virtual address space so that the process can read and write its contents with ordinary memory operations. Compared with traditional file I/O, this approach offers several benefits:

- Efficiency: Once a file is mapped, reads and writes no longer require a system call per access. The operating system loads pages on demand, so the first touch of each page still incurs a page fault, and the gain depends on the access pattern.

- Shared Memory: Processes that map the same file with a shared mapping see each other’s writes, which allows data sharing without copying messages through pipes or sockets. The processes still need to synchronize their accesses.

- Direct Access: The file’s data appears directly in the process’s address space, which avoids copying it between kernel buffers and a user-space buffer as read and write calls do.
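As a minimal sketch of what mapping a file looks like in practice, the following uses Python’s standard mmap module. The helper name demo_mapped_write and the file contents are illustrative assumptions, not part of any particular application.

```python
import mmap
import tempfile


def demo_mapped_write() -> bytes:
    """Modify a file through a mapping, then read it back with ordinary I/O."""
    with tempfile.TemporaryFile() as f:
        f.write(b"hello world\n")   # a file must be non-empty to be mapped
        f.flush()                   # make sure the bytes reach the file
        with mmap.mmap(f.fileno(), 0) as mm:   # length 0 maps the whole file
            mm[0:5] = b"HELLO"      # slice assignment writes into the mapping
            mm.flush()              # ask the OS to write dirty pages back
        f.seek(0)
        return f.read()             # ordinary read sees the mapped write
```

The write goes through a plain slice assignment rather than a write call, which is the direct access described above.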

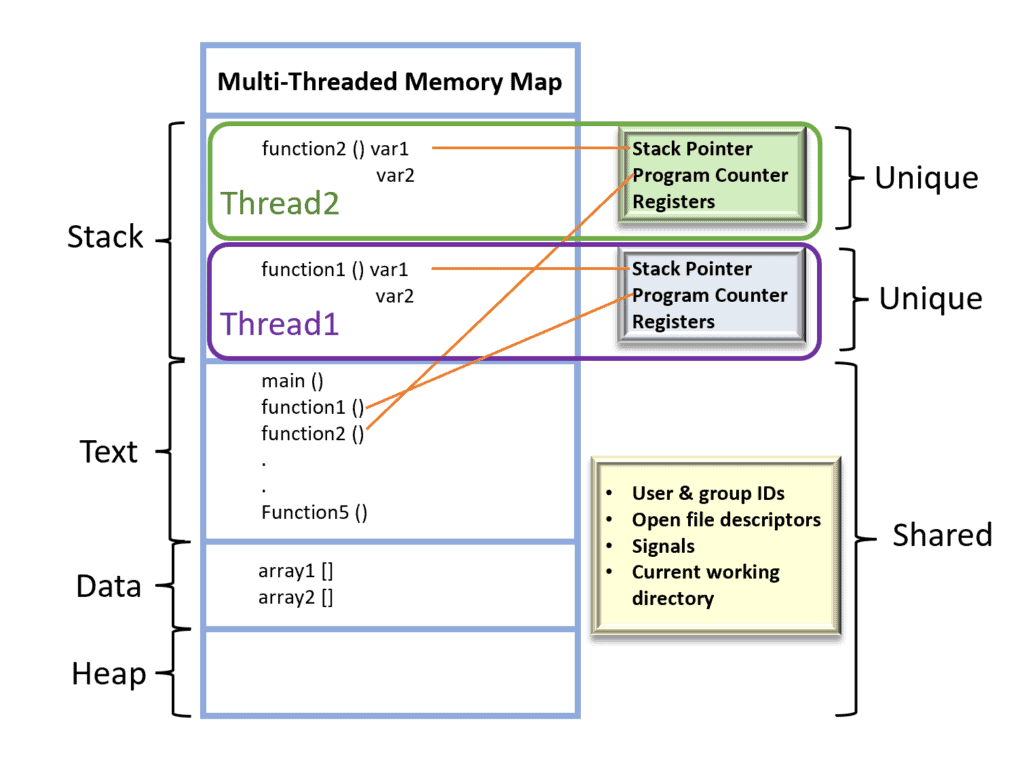

However, a memory-mapped region is shared memory, and it raises the usual challenge of shared memory: thread safety. When multiple threads within a process access the same region concurrently and at least one of them writes, race conditions and data corruption can result. The mapping itself provides no synchronization, so unless the threads coordinate explicitly, their reads and writes can interleave in unpredictable ways.

Understanding the Threat of Data Races

Consider a scenario where two threads attempt to modify the same data within a memory-mapped file. Thread A reads the current value, performs a calculation, and writes the updated value back. Simultaneously, Thread B performs the same operations. Without any synchronization, the following interleaving can occur:

- Thread A reads the value.

- Thread B reads the value.

- Thread A performs the calculation and writes the updated value.

- Thread B performs the calculation and writes the updated value.

Both threads computed their result from the same original value, so Thread B’s write overwrites Thread A’s and Thread A’s update is lost. If each thread was incrementing a counter, for example, the counter advances by one instead of two. This lost update shows why synchronization is needed when multiple threads modify a memory-mapped file.
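The four steps can be replayed deterministically to see the lost update. This sketch does not start real threads; it performs the reads and writes in the order listed above on an anonymous mapping. The counter layout (one 64-bit integer at offset 0) and the function names are assumptions made for illustration.

```python
import mmap
import struct

COUNTER = struct.Struct("<q")  # one 64-bit little-endian integer at offset 0


def read_counter(mm: mmap.mmap) -> int:
    return COUNTER.unpack_from(mm, 0)[0]


def write_counter(mm: mmap.mmap, value: int) -> None:
    COUNTER.pack_into(mm, 0, value)


def replay_lost_update() -> int:
    """Replay the interleaving by hand: two increments, one of them lost."""
    with mmap.mmap(-1, mmap.PAGESIZE) as mm:   # anonymous, zero-filled mapping
        write_counter(mm, 10)
        a_seen = read_counter(mm)       # Thread A reads 10
        b_seen = read_counter(mm)       # Thread B reads 10
        write_counter(mm, a_seen + 1)   # Thread A writes 11
        write_counter(mm, b_seen + 1)   # Thread B writes 11, overwriting A
        return read_counter(mm)         # 11, although two increments ran
```

With real threads the same interleaving happens only occasionally, which is what makes such bugs hard to reproduce.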

Strategies for Thread-Safe Memory Mapping

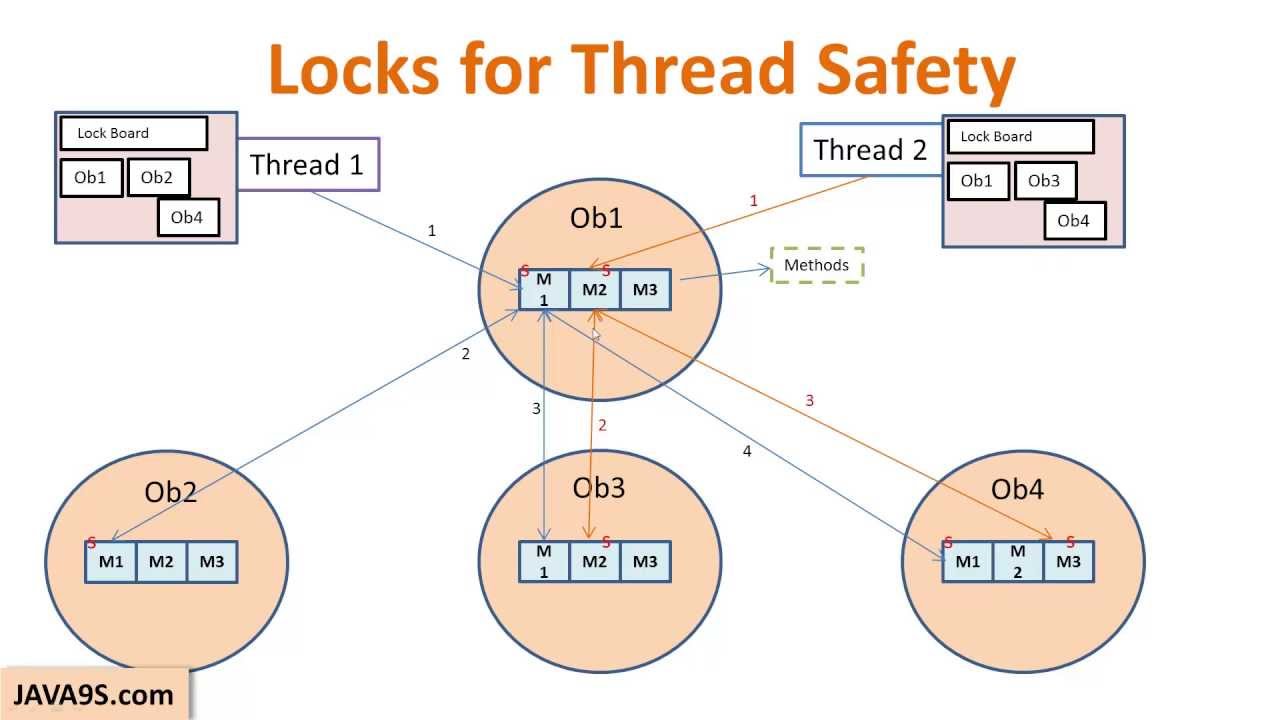

To mitigate the risks of data races and ensure thread safety, several strategies can be employed:

1. Mutexes: A mutex protects a critical section, that is, code that accesses a shared resource. It acts as a lock that only one thread can hold at a time. If every thread acquires the mutex before accessing the memory-mapped region and releases it afterwards, each thread’s operations appear atomic to the others and lost updates cannot occur. The protection holds only if all accesses to the region go through the same mutex.

2. Semaphores: A semaphore controls access to a shared resource with a counter, which makes it more flexible than a mutex. A counting semaphore can limit how many threads work on a memory-mapped region at once, for example to bound memory or I/O pressure. A count greater than one does not make the admitted threads’ accesses mutually exclusive, so they still need a mutex or atomic operations if they touch the same data.

3. Condition Variables: Condition variables enable threads to wait for specific conditions to be met before proceeding. This is particularly useful when threads need to coordinate their actions based on changes within the memory-mapped region. For example, a thread might wait on a condition variable until another thread signals that new data has been written to the memory-mapped file.

4. Atomic Operations: An atomic operation is performed on a shared memory location as a single, indivisible unit, so no other thread can observe it half-done. Examples include atomic increment, atomic decrement, and compare-and-swap, all of which are read-modify-write operations. Atomics typically apply to a single aligned, word-sized value; updates that span several values still need a lock.

5. Thread-Local Storage: Thread-local storage (TLS) provides each thread with its own private storage space. Data kept in TLS is not shared, so accessing it needs no synchronization. The memory-mapped region itself remains shared, so TLS helps only when each thread can work on its own copy of the data, for example by accumulating results privately and merging them into the mapping under a lock at the end.
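The mutex strategy from item 1 can be sketched as follows. Several threads increment a counter stored in an anonymous mapping, and a threading.Lock makes each read-modify-write a critical section. The counter layout and the function name locked_increments are assumptions for illustration. Note that threading.Lock only coordinates threads inside one process; processes sharing a mapping would need a cross-process lock instead.

```python
import mmap
import struct
import threading

COUNTER = struct.Struct("<q")  # one 64-bit integer at offset 0 (assumed layout)


def locked_increments(n_threads: int = 4, per_thread: int = 2000) -> int:
    """Increment a mapped counter from several threads under one mutex."""
    lock = threading.Lock()
    with mmap.mmap(-1, mmap.PAGESIZE) as mm:

        def worker() -> None:
            for _ in range(per_thread):
                with lock:  # acquire before touching the region, release after
                    (value,) = COUNTER.unpack_from(mm, 0)
                    COUNTER.pack_into(mm, 0, value + 1)

        threads = [threading.Thread(target=worker) for _ in range(n_threads)]
        for t in threads:
            t.start()
        for t in threads:
            t.join()
        return COUNTER.unpack_from(mm, 0)[0]
```

With the lock in place the result is always n_threads * per_thread; removing the with lock line reintroduces the possibility of lost updates.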

Choosing the Right Approach

The choice of synchronization technique depends on the specific requirements of the application. When threads must take turns accessing the memory-mapped region, a mutex is the most straightforward solution. When the number of concurrent threads must be capped, or when threads must wait for one another, semaphores and condition variables provide greater flexibility. Atomic operations suit updates to a single value, such as a counter or flag, where a lock would be unnecessary overhead. TLS is best suited for scenarios where each thread needs its own copy of the data.
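For the case where one thread must wait for another, a condition variable can be sketched like this. A reader thread waits until a writer thread has placed data in the mapping and signalled it. The function name handoff and the shared length field are hypothetical; the sketch assumes a non-empty message that fits in one page.

```python
import mmap
import threading


def handoff(message: bytes) -> bytes:
    """Pass a message from a writer thread to a reader thread via a mapping."""
    cond = threading.Condition()
    state = {"length": 0}   # guarded by cond; 0 means nothing written yet
    received = []
    with mmap.mmap(-1, mmap.PAGESIZE) as mm:

        def reader() -> None:
            with cond:
                # wait_for re-checks the predicate after every wake-up
                cond.wait_for(lambda: state["length"] > 0)
                received.append(mm[:state["length"]])

        def writer() -> None:
            with cond:
                mm[:len(message)] = message   # write the data first
                state["length"] = len(message)
                cond.notify_all()             # then signal that it is ready

        r = threading.Thread(target=reader)
        w = threading.Thread(target=writer)
        r.start()
        w.start()
        r.join()
        w.join()
    return received[0]
```

Waiting on a predicate rather than a bare notification keeps the reader correct even if the writer runs first.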

The Importance of Thread Safety in Memory Mapping

Ensuring thread safety in memory mapping is paramount for several reasons:

- Data Integrity: Unsynchronized writes to a shared mapping can leave inconsistent data in memory. When the mapping is backed by a file, the inconsistent data can also be written to disk and outlive the process.

- Performance: Memory mapping can offer significant performance gains, but synchronization has a cost. Coarse locks or long critical sections serialize the threads and can negate the benefits of direct memory access.

- Reliability: Ensuring thread safety enhances the reliability of applications by preventing race conditions and other synchronization issues that can lead to unpredictable behavior and crashes.

- Maintainability: Well-structured code with proper synchronization mechanisms is easier to maintain and debug. It allows developers to understand the flow of data and identify potential synchronization issues more readily.

FAQs

1. Is it necessary to synchronize access to all memory-mapped regions?

Not necessarily. If a memory-mapped region is accessed by only one thread, or if every thread only reads it, synchronization is not required. If multiple threads access the same region and at least one of them writes, synchronization is essential to prevent data races.

2. What are the common pitfalls associated with memory mapping and thread safety?

Common pitfalls include:

- Incorrect synchronization: Using inadequate or improperly implemented synchronization mechanisms can lead to data corruption and race conditions.

- Deadlocks: Deadlocks can occur when two or more threads are waiting for each other to release resources, resulting in a standstill.

- Starvation: Starvation occurs when a thread is repeatedly denied access to a shared resource, preventing it from making progress.
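One common way to avoid the deadlock pitfall is to acquire locks in a fixed order. The sketch below assumes a mapping split into two regions with one lock each; two threads swap the regions in opposite directions, and sorting the indices ensures both take the locks in the same order. The region size and function names are illustrative assumptions.

```python
import mmap
import threading

REGION_SIZE = 64
region_locks = [threading.Lock(), threading.Lock()]  # one lock per region


def swap_regions(mm: mmap.mmap, i: int, j: int) -> None:
    """Swap two regions, always locking the lower-numbered region first."""
    if i == j:
        return
    first, second = sorted((i, j))
    with region_locks[first], region_locks[second]:
        a = mm[i * REGION_SIZE:(i + 1) * REGION_SIZE]
        b = mm[j * REGION_SIZE:(j + 1) * REGION_SIZE]
        mm[i * REGION_SIZE:(i + 1) * REGION_SIZE] = b
        mm[j * REGION_SIZE:(j + 1) * REGION_SIZE] = a


def run_swaps(rounds: int = 500) -> tuple:
    """Run swap(0, 1) and swap(1, 0) concurrently; return both regions."""
    with mmap.mmap(-1, 2 * REGION_SIZE) as mm:
        mm[:REGION_SIZE] = b"A" * REGION_SIZE
        mm[REGION_SIZE:] = b"B" * REGION_SIZE

        def worker(i: int, j: int) -> None:
            for _ in range(rounds):
                swap_regions(mm, i, j)

        t1 = threading.Thread(target=worker, args=(0, 1))
        t2 = threading.Thread(target=worker, args=(1, 0))
        t1.start()
        t2.start()
        t1.join()
        t2.join()
        return mm[:REGION_SIZE], mm[REGION_SIZE:]
```

If each thread instead locked its first argument and then its second, one thread could hold lock 0 while the other holds lock 1, and neither could proceed.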

3. How can I identify potential synchronization issues in my code?

Several tools and techniques can help identify synchronization issues, including:

- Static analysis: Static analysis tools can detect potential synchronization problems in code without executing it.

- Dynamic analysis: Dynamic analysis tools, such as ThreadSanitizer or Valgrind’s Helgrind, monitor a running program and report memory accesses that are not ordered by synchronization.

- Debugging tools: Debuggers allow developers to step through code and examine the state of variables and threads to identify synchronization problems.

4. What are the best practices for thread-safe memory mapping?

- Use appropriate synchronization mechanisms: Match the mechanism to the access pattern: a mutex for mutual exclusion, a condition variable for waiting on changes, an atomic operation for a single value.

- Minimize the size of critical sections: Hold a lock only while accessing the shared region, and do unrelated work outside it. Short critical sections reduce contention; they do not by themselves prevent data races, so every shared access must still be inside one.

- Use atomic operations where possible: For updates to a single value, atomic operations provide thread safety without the cost of acquiring a lock.

- Test thoroughly: Thoroughly test the application to ensure that it behaves correctly under concurrent access scenarios.
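As a sketch of a small critical section, the following appends fixed-size records to a mapping from several threads. Only the slot reservation happens under the lock; formatting the record and copying it into the reserved slot happen outside it, since no other thread can be given the same slot. The record format, RECORD_SIZE, and the function name are assumptions for illustration.

```python
import mmap
import threading

RECORD_SIZE = 16  # fixed-size records (an assumption of this sketch)


def append_records(n_threads: int = 4, per_thread: int = 50) -> list:
    """Append records from several threads, locking only to reserve a slot."""
    lock = threading.Lock()
    next_slot = 0                       # guarded by lock
    total = n_threads * per_thread
    with mmap.mmap(-1, total * RECORD_SIZE) as mm:

        def worker(tid: int) -> None:
            nonlocal next_slot
            for i in range(per_thread):
                # Formatting touches no shared state, so it stays outside the lock.
                payload = f"{tid:02d}-{i:04d}".encode().ljust(RECORD_SIZE, b".")
                with lock:              # critical section: reserve a slot only
                    slot = next_slot
                    next_slot += 1
                # The reserved slot belongs to this thread alone.
                start = slot * RECORD_SIZE
                mm[start:start + RECORD_SIZE] = payload

        threads = [threading.Thread(target=worker, args=(t,))
                   for t in range(n_threads)]
        for t in threads:
            t.start()
        for t in threads:
            t.join()
        return [mm[k * RECORD_SIZE:(k + 1) * RECORD_SIZE] for k in range(total)]
```

A check that every record appears exactly once, as a concurrent test might do, confirms that no two threads were handed the same slot.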

Tips

- Document synchronization mechanisms: Clearly document the synchronization mechanisms used in the code to facilitate understanding and maintenance.

- Use a consistent approach to synchronization: Adopt a consistent approach to synchronization across the entire application to reduce the risk of errors.

- Consider using thread-safe data structures: Many languages and libraries provide thread-safe queues and similar structures. They can be used to hand work between threads, for example to route all writes to one thread that owns the mapping, which reduces the need for manual locking.
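The last tip can be sketched with Python’s queue.Queue, which is thread-safe. Producer threads never touch the mapping; they put updates on the queue, and a single owner thread applies them, so the mapping needs no lock of its own. The counter layout, the STOP sentinel, and the function name are assumptions for illustration.

```python
import mmap
import queue
import struct
import threading

COUNTER = struct.Struct("<q")  # one 64-bit integer at offset 0 (assumed layout)
STOP = None                    # sentinel telling the owner thread to finish


def single_writer(n_producers: int = 3, per_producer: int = 100) -> int:
    """Route all updates through a queue to one thread that owns the mapping."""
    updates = queue.Queue()
    with mmap.mmap(-1, mmap.PAGESIZE) as mm:

        def producer() -> None:
            for _ in range(per_producer):
                updates.put(1)          # request an increment

        def owner() -> None:
            while True:
                delta = updates.get()
                if delta is STOP:
                    return
                # Only this thread accesses the mapping while workers run.
                (value,) = COUNTER.unpack_from(mm, 0)
                COUNTER.pack_into(mm, 0, value + delta)

        owner_thread = threading.Thread(target=owner)
        owner_thread.start()
        producers = [threading.Thread(target=producer)
                     for _ in range(n_producers)]
        for t in producers:
            t.start()
        for t in producers:
            t.join()
        updates.put(STOP)               # all updates are queued before STOP
        owner_thread.join()
        return COUNTER.unpack_from(mm, 0)[0]
```

The trade-off is that every write is serialized through one thread, which suits workloads where writes are small compared with the work that produces them.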

Conclusion

Memory mapping is a powerful technique that can offer significant performance advantages. However, a mapped region is shared memory, so concurrent access requires careful attention to thread safety. By understanding the risks associated with concurrent access to memory-mapped regions and implementing appropriate synchronization mechanisms, developers can ensure data integrity, preserve the performance benefits of mapping, and enhance the reliability of their applications.
